This Azure AI Studio blog post shows how to create an LLM application, also known as a prompt flow, that extracts numbers from text.

It walks through the end-to-end process of building the application in Azure AI Studio and reviews each component that makes up an LLM application.

Before you start, make sure you can access Azure AI Studio and have configured an AI Hub and a project. To set up an AI Hub and project, see this post.

Deployment

Once your AI Hub and project are set up, create a deployment of an LLM model.

Open the AI Studio portal

Click on Build

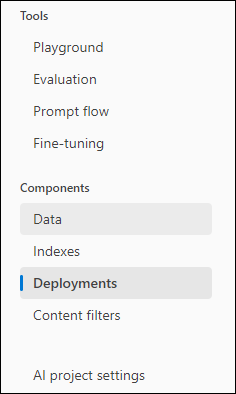

Click on Deployments

Click on Create

Select Real-time endpoint

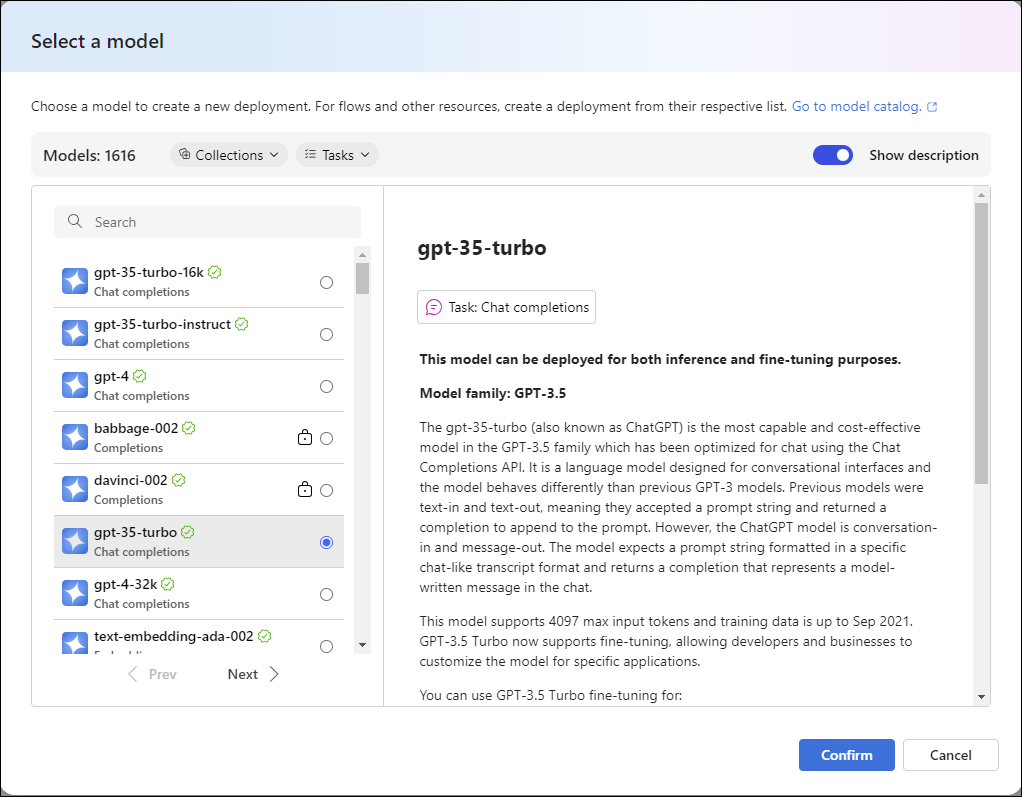

Select the LLM model from the collection

Click Confirm.

The Mechanics of a Flow

In Azure AI Studio terminology, a prompt flow provides an LLM model with an input (prompt), and the LLM generates an output.

In our case, we provide the LLM with a text string that contains numbers and ask it to extract those numbers. This is a simple example, but the same approach works for other tasks.
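Before involving an LLM, it helps to know what a correct answer looks like for the sample input used later in this post. The sketch below is a deterministic baseline using Python's standard `re` module, assuming numbers are non-negative integers or simple decimals (no signs, thousands separators, or spelled-out numbers). `extract_numbers` is a hypothetical helper for checking results, not part of Azure AI Studio.

```python
import re

def extract_numbers(text: str) -> list[str]:
    """Return every integer or simple decimal in `text`, in order of appearance."""
    # \d+ matches a run of digits; the optional group allows a decimal part such as 3.14
    return re.findall(r"\d+(?:\.\d+)?", text)

sample = "this is a text with 5 6 numbers and other values 7"
print(extract_numbers(sample))  # ['5', '6', '7']
```

A regex is enough for this narrow case; the LLM becomes useful when the input is messier, for example numbers written as words.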

When you create an LLM application in Azure AI Studio, it helps to understand its moving parts. A flow has the following components:

- Input – The data we provide to the flow, which is passed to the LLM as part of the prompt. It can contain text, integers, and more.

- Nodes – The processing steps that execute the flow. Each node runs a tool, such as the LLM tool described below.

- Output – Results produced by the LLM.

Our flow uses the following tool to send the prompt to the LLM:

LLM tool – The LLM tool connects the flow to a deployment (a ChatGPT model) and sends the prompts to the LLM.
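To make the three components concrete, here is a plain-Python sketch of how data moves through this flow, assuming a stubbed LLM in place of the real deployment. `fake_llm` and `run_flow` are hypothetical names for illustration only; in Azure AI Studio the runtime executes the nodes for you, and you do not write this code.

```python
from typing import Callable

def fake_llm(system_prompt: str, user_prompt: str) -> str:
    """Hypothetical stand-in for the LLM tool; a real flow calls the deployment instead."""
    # Keep the whitespace-separated tokens that consist only of digits
    return " ".join(tok for tok in user_prompt.split() if tok.isdigit())

def run_flow(inputs: dict[str, str], llm: Callable[[str, str], str]) -> dict[str, str]:
    # Input: the value supplied for the flow input named "text"
    text = inputs["text"]
    # Node: the LLM node builds its prompt from the input and stores its result under its name
    node_results = {"LLM": {"output": llm("You extract numbers from text and strings.", text)}}
    # Output: the flow output LLMoutput is bound to the LLM node's result, like ${LLM.output}
    return {"LLMoutput": node_results["LLM"]["output"]}

print(run_flow({"text": "this is a text with 5 6 numbers and other values 7"}, fake_llm))
# {'LLMoutput': '5 6 7'}
```

Passing the LLM in as a callable keeps the sketch testable without network access.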

Create a Prompt Flow

To create a prompt flow for the LLM application:

Open AI Studio.

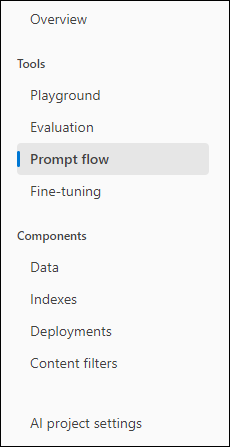

Click on Prompt flow

Select Standard flow from the options.

From the flow page, start the automatic runtime.

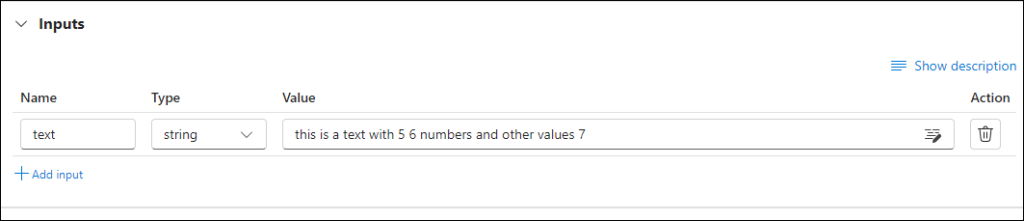

In the input tool, add an input with the following values:

Name: text

Type: string

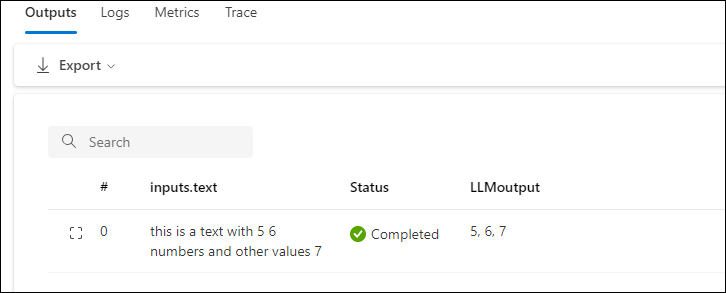

Value: this is a text with 5 6 numbers and other values 7

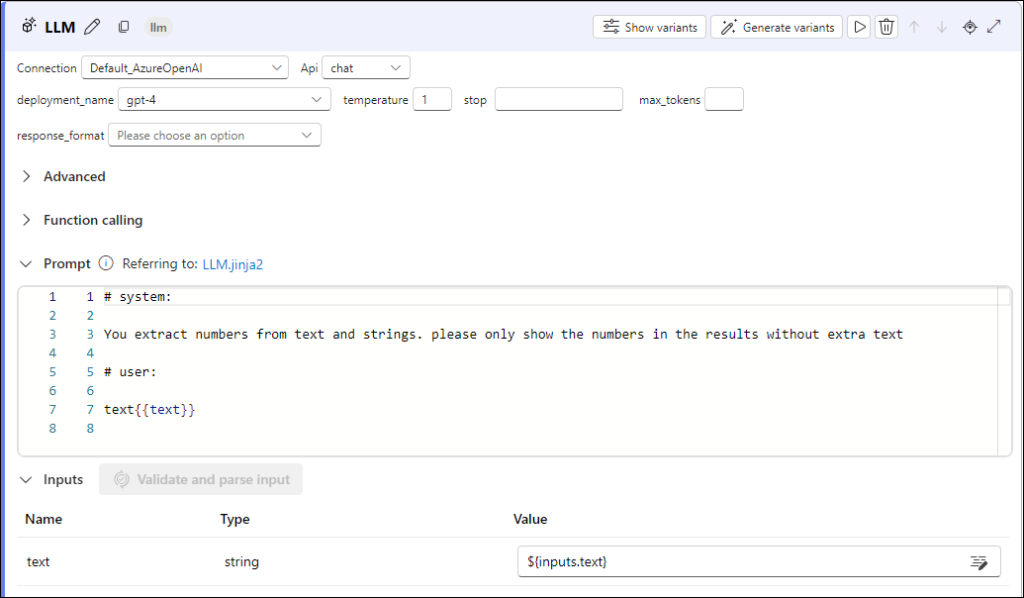

In the LLM tool, rename the node to LLM and set the prompt to:

# system:

You extract numbers from text and strings. Please show only the numbers in the result, without any extra text.

# user:

{{text}}
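The `# system:` and `# user:` lines mark chat roles: with the API set to chat, the rendered prompt is sent to the model as a list of role-tagged messages. The sketch below approximates that conversion with the standard library only. It is not prompt flow's actual parser (prompt flow renders the template with Jinja2 first), and `render_chat_prompt` is a hypothetical name.

```python
import re

def render_chat_prompt(template: str, **variables: str) -> list[dict[str, str]]:
    """Approximate how a chat prompt template becomes a list of role-tagged messages.

    Simplified: only {{name}} placeholders are substituted, whereas prompt flow uses Jinja2.
    """
    # Substitute each {{name}} placeholder (spaces inside the braces are allowed)
    rendered = re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: variables[m.group(1)], template)
    messages: list[dict[str, str]] = []
    for line in rendered.splitlines():
        # A line such as "# system:" or "# user:" starts a new message with that role
        header = re.fullmatch(r"#\s*(system|user|assistant)\s*:", line.strip())
        if header:
            messages.append({"role": header.group(1), "content": ""})
        elif messages:
            messages[-1]["content"] += line + "\n"
    # Trim the blank lines around each section's text
    for message in messages:
        message["content"] = message["content"].strip()
    return messages

template = """# system:
You extract numbers from text and strings. Please show only the numbers in the result, without any extra text.

# user:
{{text}}
"""
for message in render_chat_prompt(template, text="this is a text with 5 6 numbers and other values 7"):
    print(message["role"], "->", message["content"])
```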

Make sure the connection points to your deployment and the API is set to chat.

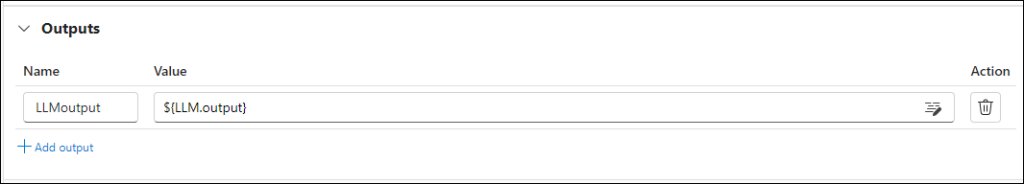
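With the API set to chat, the LLM tool sends the rendered messages to the deployment's chat completions endpoint. To test the deployment outside the flow, here is a minimal sketch that assumes an Azure OpenAI deployment, key-based authentication, and REST API version `2024-02-01` (check the Azure OpenAI REST reference for the version that applies to you). The endpoint, deployment name, and key are placeholders, `temperature: 0` is an assumed setting, and `build_chat_request` is a hypothetical helper. The request is only built; the sending code is left commented out.

```python
import json
import urllib.request

def build_chat_request(endpoint: str, deployment: str, api_key: str, text: str,
                       api_version: str = "2024-02-01") -> urllib.request.Request:
    """Build, but do not send, an Azure OpenAI chat completions request for one text."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = {
        "messages": [
            {"role": "system",
             "content": "You extract numbers from text and strings. "
                        "Please show only the numbers in the result, without any extra text."},
            {"role": "user", "content": text},
        ],
        "temperature": 0,  # assumption: low randomness suits an extraction task
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )

# Placeholders: replace with your resource endpoint, deployment name, and key
request = build_chat_request("https://YOUR-RESOURCE.openai.azure.com", "YOUR-DEPLOYMENT",
                             "YOUR-API-KEY", "this is a text with 5 6 numbers and other values 7")
print(request.full_url)
# To actually send it (requires network access and a valid key):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```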

Next, use the output tool to return the results from the LLM.

In the output tool, use the following values:

Name: LLMoutput

Value: ${LLM.output}

Save the flow and click Run.

When the results appear, click View output.

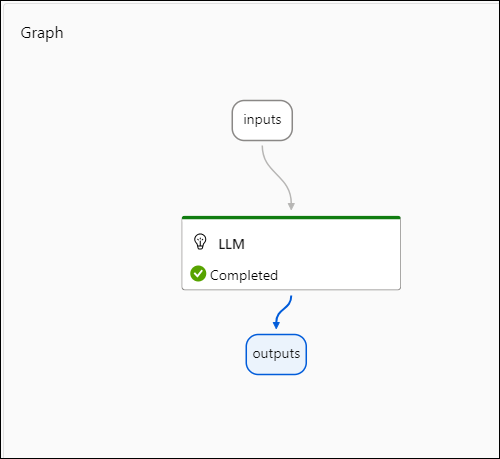
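The LLM returns free text, so if you use `LLMoutput` downstream, it is worth checking that the reply contains only numbers. A minimal sketch, assuming the reply is numbers separated by spaces or commas; `parse_llm_numbers` is a hypothetical helper, and the reply string below is an illustrative value, not a recorded result.

```python
import re

def parse_llm_numbers(output: str) -> list[int]:
    """Turn the LLM's reply (for example "5 6 7" or "5, 6, 7") into integers."""
    # Split on commas and any whitespace, dropping empty pieces
    tokens = [tok for tok in re.split(r"[,\s]+", output) if tok]
    # int() raises ValueError if the model added extra words, which flags a prompt problem
    return [int(tok) for tok in tokens]

expected = [5, 6, 7]
reply = "5, 6, 7"  # hypothetical LLMoutput value; copy the real one from View output
print(parse_llm_numbers(reply) == expected)
```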

The graph view should show the flow as a diagram that connects the input, the LLM node, and the output.
